Unintended Consequence

MILWAUKEE – Cities around the country that have installed energy-efficient traffic lights are discovering a hazardous downside: The bulbs don’t burn hot enough to melt snow and can become crusted over in a storm — a problem blamed for dozens of accidents and at least one death.I’d try to attack this problem with eaves and surface coatings rather than heat.

Criticism Is Easy

I presented three computational models for how attention could lead to consciousness. Based on the papers we assigned, one of them had strong support from the data (Taylor’s CODAM), one of them had medium data-based support (Wang’s LEABRA, small amount of data given via Figure 3 here), and one had little to no support from data (Cavanna’s figure 1 here, the “ascending reticular activating system”). We asked the thirteen other students to rank the models at the beginning of the class, with 1 being the best. Taylor’s model was ranked last, with an average rank of 2.21, Wang’s was second best, with an average rank of 2.07, and Cavanna’s was considered the best, with an average rank of 1.71. So, there was an inverse relationship between the amount of data presented between a model and how much people preferred it.I’m sure the students preferred the storytelling of the least-supported theory. But I agree they should prefer the story that is a proven model for actual data, even if it’s less pleasing aesthetically.

It’s probably what everyone has told them to do their whole lives: be critical of everything you read, don’t trust statistics, etc. The better advice would be to simply try to gauge the veracity and utility of any individual claim based on the data you are given and your prior beliefs about the possible bias of the data source.Interpreting data is hard. Most students probably didn’t try to do so.

If people are critical of every new idea they encounter, all that will do is bias them towards favoring the status quo or the null hypothesis. I blame the market failure on our social norms: being critical is too often viewed as automatically synonymous with correct.Lazy skepticism is a necessary filter; too much “research” is being produced and publicized. However, if a trusted expert recommends a paper, I’d be happy to learn about the evidence in it. I’d prefer that they summarize for me the data and the inferences they think should be drawn from it, because I’ll rarely bother to sift through a paper aimed at the clique of academics who specialize in the topic.

Explosive Exercise

EXPLOSIVE EXERCISES IN SPORTS TRAINING: A CRITICAL REVIEW This paper reviews evidence relating to the effectiveness and safety of explosive exercises, such as Olympic style weight lifting, other weight training exercises performed at a fast cadence, and plyometric exercises, that are commonly used in the strength and conditioning training of athletes.Contrary to popular belief and the practices of many athletes, the peer-reviewed evidence does not support the view that such exercises are more effective than traditional, slow and heavy weight training in enhancing muscle power and athletic performance. In fact, such exercises do not appear to be any more effective in this regard than weight training at a relatively slow cadence, and some evidence suggests they are less so. Also, such explosive exercises do not transfer well (if at all) to athletic performance on the sports field, and present a significant injury risk.Nonetheless, I expect that the stereotypical uncoordinated, awkward, muscle-bound bodybuilder really exists to the extent that he has practiced no additional physical skills. Presumably the sport is practiced explosively to the extent that there’s any performance benefit in doing so. People love to win.

Endurance Exercise

which is quite long. Adaptation to both varieties of anaerobic intervals below seems to reach diminishing returns after just 2-3 weeks (6 sessions).

Training anaerobic power is pretty much what you’d imagine. Generally work bouts lasting 30-60 seconds are used with fairly long rest intervals. For a one minute maximum effort, a rest interval of 4-5 minutes of easy recovery might be taken. The idea, essentially is to generate a lot of power (and a lot of waste products, some have even called this lactate production training) during the work bout and then give the body a chance to clear it during recovery. That’s then repeated a number of times (perhaps 8-10 in total depending).

Anaerobic capacity training, as noted above, is more along the lines of repeated longer repeated efforts (60-90 seconds) with rest intervals of perhaps 1:1 (so 60 seconds rest for a 60 second interval, 90 seconds for a 90 second interval). Intensity has to be lower here and the goal is to maintain power output against increasing fatigue (some used to call this lactate tolerance training).

this energy system is not massively trainable. Certainly buffering capacity can be improved (and science continues to understand the adaptations involved) but overall improving the power or capacity of this pathway is relatively limited; you put in a lot of very hard, very draining work for not a lot of payback.

the adaptations to this type of training occur quickly but then stop just as rapidly. Studies find that the improvements to this type of work typically stop after about 3 weeks. In one study of cyclists, 12 HIIT workouts over 6 weeks generated no better improvements in performance than was seen after the first 6 workouts over 3 weeks.

in Effects of Moderate-Intensity Endurance and High-Intensity Intermittent Training on Anaerobic Capacity and VO2 Max a similar pattern was seen: the major effects occurred in the first 3 weeks with far smaller effects occurring in the last three.

the 2000 German track cycling team, preparing for the 4km (an event lasting about 4 minutes); they performed miniscule amounts of anaerobic training and did so for about 8 days prior to the event. They had found that that was all that was required to max out the anaerobic pathways.

the high acid production caused by these types of anaerobic intervals may damage mitochondria and overall endurance especially if it’s not balanced by sufficient low intensity work (Lydiard, for example, was adamant that anaerobic work never be performed while building the aerobic engine). This was an older idea that has seen recent scientific validation: in a recent study, individuals who consumed bicarbonate prior to training obtained better aerobic adaptations, presumably by buffering acidosisAerobic training for VO2 max also reaches diminishing returns in just 3-8 weeks (8 weeks starting from a very weak base).

Interval training, specifically the kind that drives athletes to achieve VO2 max primarily affects heart function. Certainly there are going to be peripheral effects in muscle but the main effect is in heart.

VO2 max can be sustained for 5-8 minutes at absolute maximum, generally VO2 max intervals are performed for anywhere from 2-5 minutes (with 3 minutes being a fairly standard duration). It will generally take about one minute for the body to achieve Vo2 max such that anywhere from 1-4 minutes is performed at VO2 max. With multiple sets, a rather large amount of training at VO2 max levels can be achieved without having to go to complete exhaustion.Rest between aerobic intervals should be 50-100% of the duration of an interval. Steady state aerobic endurance requires and develops an endurance adaptation in muscles

interval training improved Vo2 max far more than long-duration training, it had little to no impact on citrate synthase levels [evidence of aerobic endurance adaptation in muscle]. In contrast, steady state training primarily improved citrate synthase levels with little impact on VO2 max.I’m sure that starting from very low fitness, you could see improvements to both with some intermediate duration. But in already fit people, you really mostly only build one thing at a time (and sometimes that work can actually actively degrade other areas). Steady state means, of course, that you could keep going for a long time (an hour). If you want to adapt faster, you’d work near the threshold of the most intensity you could maintain for that time. It’s probably good to do more sets (throughout the week or back to back) of shorter duration (10-20 min) rather than actually working that hard for an hour. I don’t even want to determine what my limit is for an hour; I’d just work really hard for 10-20 min and then reduce the intensity by a little bit from that benchmark as an estimate of what I could do for an hour.

| Goal | Intensity | Work Interval | Rest Interval |

| Neuromuscular Power | Maximum | 6-15 seconds | Complete (2-5 minutes) |

| Anaerobic Power | Just Below Maximum | 30-45 seconds | 2-5 minutes |

| Anaerobic Capacity | High | 60-90 seconds | 60-90 seconds |

| Aerobic Power | Above maximum steady state | 2-5 minutes | 2-5 minutes |

| Aerobic Capacity | At/near maximum steady state | 8-20 minutes | 4-10 minutes |

Transition

The Quiet War

Air

Pride and Prejudice (2005)

The Beat That My Heart Skipped

Oldboy

Nabokov Revised Too

Shakespeare’s revisions (and Nabokov’s) matter for two reasons. Revision indicated that even these writers shouldn’t be considered godlike figures from whom the muse poured forth perfection on the first try, but writers who are—in some ways—like other writers, in at least this respect: They were subject to second thoughts.

This popped out at me while skimming a fawning piece on Nabokov, who must have died recently.

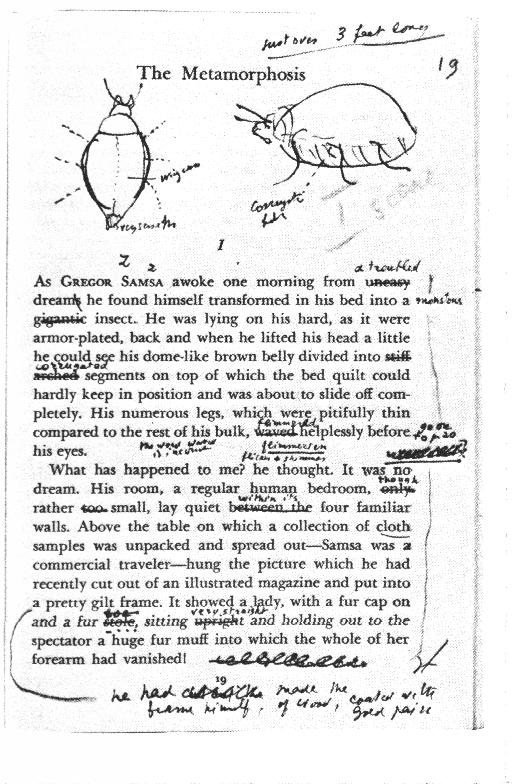

I thought it was well-known that any artistic, musical, literary, mathematical, or engineering genius both used writing to enhance their mental working space, and improved substantially on their work by revision. I’d say whatever exceptions there are (who did it all in their head and submitted only first drafts), could have been better had they edited. If they didn’t edit or redraft, all I’d say is that they didn’t find the activity rewarding, not that it wouldn’t have helped. I’m not saying that the most-revised work is always the best (though Madame Bovary may be excellent; I haven’t read it). It was seeing this edit of Kafka’s work by Nabokov in my RSS reader that started me wondering if Nabokov was dead:On the whole, the edits improve it. I’ve never read Nabokov - but I think I will, soon.

Risk Taking While (No Longer) Drunk

Scientists at the University of Washington (U.W.) in Seattle fed alcohol to a group of rats and found that their ability to make good decisions was impaired even long after they stopped consuming booze.

Researchers found that the tendency to make imprudent decisions did not fade for up to three months after the rats stopped consuming alcohol.(from Scientific American’s report) In many ways, rats are nothing like humans, but I expect that whatever mechanism explains this in rats will also exist in us. The rats weren’t actually visibly drunk or addicted (displaying withdrawal) to the alcohol; they were just always slightly intoxicated for 20 days straight. After ending alcohol consumption, the rats got to play with two reward-dispensing levers; one with a high expected reward but no extremely large “jackpot”, and the other with worse expected payoff, but larger payoff size (in both, I assume there’s some probability of receiving no reward). I wouldn’t expect rats to correctly compute expected reward per trial, but nonetheless that rats who never got drunk preferred the higher-average lower-peak reward, while the drunk rats liked the bigger-jackpot less-rewarding lever, for up to 3 months of soberness. We’ll assume the scientists randomized the positions of the jackpot and consistent levers between trials; otherwise the persistence of preference would be explained by habit.

Dignified With a Response

Ruth Mayo … found that for a substantial chunk of people, the “negation tag” of a denial falls off with time. … explaining why people who are accused of something but are later proved innocent find their reputations remain tarnished. … So is silence the best way to deal with myths? Unfortunately, the answer to that question also seems to be no. Another recent study found that when accusations or assertions are met with silence, they are more likely to feel true

In that vein, Robin Hanson asks what conclusions we should draw when a heavily promoted fringe belief (not accepted by official authorities) isn’t met with any substantial rebuttal. Not only should we not believe fringe advocates just because they’re loud, fervent, and apparently unopposed, we should take the official silence as an implied rebuke. I often wonder: when is it worth my effort to undertake my own investigation of the merits of the fringe argument, in depth sufficient that I could find the confidence to overcome the strong prior against them by nature of their fringe status? In most cases, either the fringe claim is so incredible, or the advocates so flawed, that there’s no temptation. In some cases, the payoff for correctly adopting a fringe belief seems low (if it’s true that excessive milk drinking is harming me, it probably isn’t by very much), even if the cost of gaining the information needed to decide it is modest. Prof. Hanson also suggests that when there is serious opposition to an idea, the fact that the opponents are diverse in their reasons and premises, is not evidence against them. For example, people sometimes advocate racial (among other types of) profiling for the reason that it’s rational to avoid considering certain groups when you want to find a qualified person of any group as easily as possible, but Robin and others want to argue that it’s unfair and breeds crime, for not enough benefit. The argument for profiling is simpler and clearer, but since most of us don’t want to allow profiling, we should be consistent and not generally take “confusion in the opposition” as evidence.

A Foolish Consistency

LessWrong discussion

Nerds are nuts

Crash (a Kind of Movie Review)

crash: a pretty, fun, full-of-crap movie, with some powerfully acted moments (spoilers follow)

everywhere: tarantino-esque supposed to be funny-because-it’s-shocking racist repartee. the absolute low: “how did those two cultures (el salvadorian and puerto rican?) get together and decide to park their cars on the grass?”, said to his girlfriend in a fit of blue-balled pique.

coincidence #1: opening crash - cut to dazed detective getting out and finding his dead brother’s shoe on the side of the road

coincidence #2: molester cop randomly encounters molested woman next day and pulls her from her car just before it explodes (i was really pulling for him! it was a tasteful molestation, so he turned out ok)

coincidence #3: molester cop’s ex-partner also randomly encounters molested woman’s partner the same day and saves his life after he flips out and fights back against carjackers, then, continuing to run amok, attempts suicide-by-cop in a delayed reaction to his doormat-like acquiescence to her molestation-by-cop.

coincidence #4: one of the carjacker who failed in #3, later that night while hitchhiking ends up shot by the #3 lifesaving cop - while not emotionally significant, it’s still improbable that they would both have an independent relationship to the same car

obvious: evil HMO refuses to check molester’s dad for prostate cancer

obvious: imaginary bullet-proof cloaks and a blank ammunition

obvious: ludacris ironically railing against mumbling rappers

annoying: DA assistant’s sleazy/glib blackmail presentation

annoying: if you don’t want to rock the LAPD boat in order to leave your racist partner, you have to publicly claim uncontrollable flatulence

annoying: knowing the leaking gasoline would eventually reach the flame. (a person or a blanket would have easily been able to stop that flow)

retarded: molested woman’s partner brawling with two armed carjackers (we’re to understand that said carjackers, who earlier told us they would never steal from black people, won’t shoot)

retarded: detective’s brother / ludacris’ carjacking partner getting himself shot and tragically having an idol of St. Somebody in his hand rather than a gun. i would have shot him too.

retarded: giving ludacris back his gun and telling him he embarrasses you

true and sad: producer telling above partner (also a TV director) to direct a black actor to “talk more black”

horrible person: hmo bureaucrat who admits she’s making prostate dad suffer because his molester son is racist

horrible person: persian father who was enough of a dick that i was happy his store was vandalized in possible retaliation

horrible person: detective’s mother. gross. her son is a saint for not raging against her.

horrible person: DA’s wife. disgusting in every way. her quasi-redemption did nothing for me - when she realizes she is angry all the time for no reason, when she realizes that her maid (who should hate her but is too saintly) is the only friend she has, i think “yes, you are horrible, and your husband treats you better than you deserve”

possibly horrible person: DA, because presumably he knows what his blackmailing subordinate did. “i need to pin a medal on a black person so i still get votes from people after i was carjacked by black people” was just stupid, not evil. it’s part of the morality of the film that as a politician, he must be tainted by evil, but i do enjoy his calm command. he seems reasonable to me.

horrible person: ludacris’ carjacking partner.

horrible person: ludacris, until he’s conveniently redeemed by setting free the smuggled vietnamese locked in the back of the van he stole because he remembered it from the night before he hit-and-nearly-ran the night prior in the car he stole from the DA

horrible person: molester cop until he’s conveniently redeemed by heroically pulling the woman he molested from a car just prior to its explosion

nice person: locksmith (and his family), whether or not he vandalized the persian father who abused him and cheated him. horrible if in handing down invisible bulletproof cloak he scarred his daughter for life after he was shot (thanks, blanks) - which i was waiting for the entire movie.

nice person: persian daughter who bought the blanks

nice person: detective, provided he reneges on his deal to withhold exculpatory evidence now that his brother is dead

nice person: director who let his wife be molested.

nice person: not-racist cop, who rightfully shot his crazy hitchhiker (but he could have done MORE! we ALL could do MORE!)

overall: about the 300th best movie i’ve watched. pretty and well-acted. if you liked crash, magnolia does it better (equally believable and with less moralizing)

Primitive Scala

A lower level (Scala) solution to this puzzle:

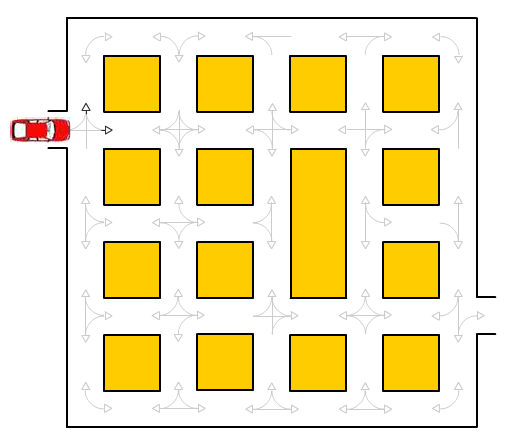

There’s a path from the car to the exit on the right following the lines at corners, and emerging only at arrowheads. This is just a directed graph. The two directions on a street segment are independent nodes. I stole from Daniel Sobral the text representation of the graph, and implemented breadth first (shortest path) search just like he did. The difference is that I explicitly represent the directed graph, and I do so in typical programming contest style. He has a nice ASCII art printout; I just used simple debug prints to verify that I built the graph right.

final case class Roadmap(rows: Int,cols: Int) {

type Onto=Array[Int] // adjacency list

type Roads=Array[Onto] // vertices (unidirectional road segments)

val RC=rows*cols

val ndir=4

val goal=RC*ndir

val DRC=RC*ndir

val roads=new Roads(DRC) // one dimensional array allows for simpler adjacencies at the cost of uglier addressing

/* separate road segment for each direction right left down up (0 1 2 3)

so that (row,col) is the location of the upper/left corner of that segment (be consistent!)

*/

def index(rldu: Int,row: Int, col: Int) = RC*rldu+row*cols+col

def coord(i: Int) = { val rldu=i/RC;val r=i%RC;(rldu,r/cols,r%cols) }

val R=0

val L=1

val D=2

val U=3

val NONE=4

def c2dir(a: Char) = a match {

case ‘R’ => R

case ‘L’ => L

case ‘D’ => D

case ‘U’ => U

case _ => NONE

}

def dir2c(d: Int) = “RLDUN”(d)

def coordname(t: (Int,Int,Int))=t match {case (rldu,row,col) => “%c%d%d”.format(dir2c(rldu),row,col)}

def i2s(i: Int)=coordname(coord(i))

/* set the transitions that meet in the intersection on row r, col c.

exits(dir) if there’s an arrowhead; undir is a list of pairs of linked dirs */

def setCorner(r: Int, c: Int, exits: Array[Boolean], undirs: Array[(Int,Int)], goaldir: Int) {

// remember, r,c gives the upper/left part of road

val froms=Array(index(L,r,c),index(R,r,c-1),index(U,r,c),index(D,r-1,c))

// to approach from the right, you head left. froms and tos are identical except opposite directions

val tos= Array(index(R,r,c),index(L,r,c-1),index(D,r,c),index(U,r-1,c))

val ways= Array.fill(ndir)(new collection.mutable.ArrayBuffer[Int](ndir))

def checkdir(from: Int,to: Int) {

if (goaldir==to) ways(from)+=goal

else if (exits(to)) ways(from)+=tos(to)

}

for ((from,to) checkdir(from,to)

checkdir(to,from)

}

for ((f,w) if (w.size>0) roads(f)=w.toArray

// since we didn’t pad the border, we’ve considered indices that are off the array.

// user input must not ask us to move from somewhere off the array

// a padded border would prevent this, and allow a single start and goal state

}

}

def s2dirs(s: String) = (c2dir(s(0)),c2dir(s(1)))

def readCorner(r: Int, c: Int, desc: String) {

val a=desc.trim split “:”

val exits=new

Array[Boolean](ndir)

var lastdir=NONE

var goaldir=lastdir

for (

c if (c==’!’) goaldir=lastdir

else {

val d=c2dir(c)

if (d!=NONE) {

exits(d)=true

lastdir=d

}

}

}

setCorner(r,c,exits,a(1).trim split ” ” map s2dirs toArray,goaldir)

}

def read(s: String) = {

var r=0

for ((line,row)=0) zipWithIndex) {

for ((s,col) readCorner(row,col,s)

}

}

}

def path2goal(starts: Int*) = {

type Path=List[Int]

val q=new collection.mutable.Queue[Path]

val seen=new Array[Boolean](DRC)

starts foreach (seen(_)=true)

val s=starts map (x=>List(x))

q.enqueue(s: _*)

var p=q.head

while (p.head != goal) {

val

h=p.head

seen(h)=true

val n=roads(h) map (_::p)

q enqueue (n: _*)

p=q dequeue

}

p

}

def prettypath(p: List[Int]) = p reverseMap i2s

} object car {

/* from http://dcsobral.blogspot.com/2009/09/puzzle.html */

val graphString = “”“|

|DR: DR, RLD: RD LD, L: DL LR, LR: DR LR, DL: DL

|UR: LU RU DU LR, LRD: LR UD LU RU LD RD, UDLR: UD LR UR LD, UDLR: UD LR RD, UL: UD UL

|UDR: UD RU RD, UDLR: LR LD DR RU, UD: UD UL DL, UDR: UD UR, UD: UD LD

|UDR: UD RU RD, UDLR: UR UL LR RD, UR: UD LR LU RU DR, ULR: LR UR UL DR DL, UDLR!: UD UL DR

|UR: UR, ULR: UL UR LR, ULR: UL UR RL, ULR: UL UR RL, UL: UL

|”“”.stripMargin

def main(args: Array[String]) {

val r=Roadmap(5,5)

r.read(graphString)

println(r.prettypath(r.path2goal(r.index(r.R,1,0),r.index(r.U,0,0))))

}

}

The format is explained on Daniel’s blog, but I found it odd enough that I’ll mention that it’s a matrix of intersections with EXITS: UNDIRECTED_ADJACENCIES. EXITS is the list of directions that have a departing arrowhead. The adjacencies are undirected to save typing (UR means both UR and RU). I added a ! following the arrowhead if it leads to the goal, since I didn’t want to hard code the exit location.

(SPOILER: R10, R11, D12, D22, R32, U23, U13, U03, R03, D04, L13, D13, R23, D24, D34, L43, L42, L41, L40, U30, U20, R20, D21, L30, D30, R40, R41, R42, R43, U34, where 32 means the road segment with its upper left at row 3 and column 2)

Tree Pattern Matching

Fast Translation Rule Matching for Syntax-based Statistical Machine Translation

in EMNLP09 (Zhang et. al) gives a practical method for top-down enumerating tree pattern matches against the root of a forest (compact regular set of trees). This problem arises in MT when you first context-free-parse your source material, and then transform its syntax tree into another language - since parsers aren’t perfect, you translate all the likely parses rather than just the most likely, and pick the most likely and fluent output. Apparently the baseline pattern matching methods used are really terrible:

- EITHER: Up to the largest rule size/shape iterate over the (exponential in that size) many forest-topping label sequences, checking each against a rule label sequence hash.

- OR: Iterate over all the rules, checking each one independently against the forest.

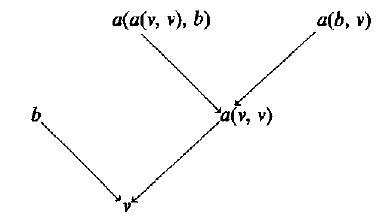

They give an improvement to the latter approach: if you’ve already matched the first 3 levels of a 4-height rule before, don’t repeat the work. (The diagrams are pretty good; refer to them if you want to make sense of what follows). This is 20 times faster, and makes their overall MT system twice as fast. If you’re actually using one of the above horrible baseline approaches, you would do well to emulate this paper. I suspect that people are in practice using a hybrid of the two: exhaustively unpack the forest for a set of (small) top-of-tree-shapes e.g. x(x(x,x),x), retrieving potential rule sets by labels-hash, and then iterating over the resulting subset. Unfortunately, they only compare their performance against the two (stupid) extremes. I’ll also note that it always makes sense to (reversibly) binarize both the forest and the tree patterns in the same way, if you haven’t already, e.g. A(B,C,D) binarized would be: A(B,C_D(C,D)) where C_D is a virtual nonterminal that only appears in the context C_D(C(…),D(…)).

The avoidance of (some) repeated work is accomplished by 1) organizing tree patterns (rule left hand sides) to reflect these shared upper levels and 2) performing exhaustive top-down breadth-first expansion of pairs of (pattern state, forest states - one for each leaf in pattern state), where the forest states tuple may grow exponentially with depth. Since we’re interested in the subforests aligned to the leaves of our tree pattern, we would take the forest states for pattern states corresponding to complete patterns, and perform some computation (MT in this case) on the subforests + pattern - we don’t just want the set of matching tree patterns. This paper could have been clearer and shorter. The pseudocode has quirks. It also seems to cite without reading: Decoding Complexity in Word-Replacement Translation Models (Knight 1999) does not show MT in general is NP-hard, only that IBM Model 1 (one of the simplest word to word translation models, with very free word reordering) is. MT based on parsing, without global features, is actually polynomial (albeit high degree if you have e.g. target 5gram probabilities). Even though the forests arising from a parser will have the same root label for all trees in a state (e.g. NP covering words 1-2 has only NPs), I prefer the regular tree grammar formalism for a set of trees, where a grammar state can have production with different tree roots. This paper handles the case where each state has a single unique tree root, since that’s what their parser gives them. A general RTG production of rank k would need to be unpacked into N^k productions to achieve this, where N is the size of the tree label alphabet. It’s better to modify your algorithm to use (root-label,forest-state) tuples instead of just (forest-state) whenever you want to look at only same-root trees. I’m using “state” instead of the usual “nonterminal” to avoid confusion with the labels of the parse tree (in parsing, NP is a called a “nonterminal” of the tree NP(NN(dog))) since it’s not a “terminal”, or leaf). Everything in the paper is called “hyper-X”. There’s even a section called “hyper-node, hyper-path and hyper-tree”. I appreciate the enthusiasm, but these are not great names, especially since they’re (misleadingly) suggestive of vertex/node, hyperpath, and hypertree in hypergraphs. The forest is also given as a set of “hyper-edges” (without definition, but obviously they mean a directed B-hyperedges with ordered tails). Obviously if the parse forest has probabilities attached to productions, you will have accumulated those in the process of finding a particular match. The authors could have cited, for the related problem of matching a set of tree patterns against a single tree, Pattern Matching in Trees (Hoffman and O’Donnell 1982). That work deals with ranked tree labels (doesn’t provide a way to say “subtree rooted in a NP of any arity”; you’d have to have NP(x) NP(x,x) NP(x,x,x) …), but that’s always a trivial change. As far as I can tell, it’s impossible to use the top-down matching scheme in that paper efficiently on forests. Hoffman and O’Donnell provide a helpful framework: the (immediate) subsumption graph of tree patterns. This is a directed acyclic graph with edges from a to b if a>b (a>b (“subsumes”) iff \forall f a(f) => b(f)), where I’ve used a and b as predicates over trees or tree-sets f. Immediate just means that only the necessary edges are kept (subsumption is transitive). In the immediate subsumption graph pictured below, v means a variable, i.e. label can be anything:Top-down matching procedures that report each assignment of subforests to tree pattern variables exactly once, will need to turn the subsumption graph into a tree. Most likely you’ll want to do this in advance. I’ve done this in a depth-first leftmost-expansion fashion by turning each tree pattern into a string, and combining the results into a trie with branching choice of “where to expand next”, implicitly giving a tree subgraph of the immediate subsumption graph (unpublished work). Since I allow tree labels to have variable arity, I separate the choice of a label and its arity (the latter may never be specified). My algorithm for matching this subsumption subgraph against a forest is the same as Zhang et. al, except that they’re breadth first, and they (unnecessarily) expand the whole level at once. That is, their subsumption subgraph is not immediate. They lose some sharing as a result, although it would be easy to fix this. You can devise pattern sets that make a particular subsumption graph->tree mapping perform badly, and I don’t have any intuition as to whether theirs or mine will perform better on particular sets (e.g. MT rules containing most naturally occurring tree fragments up to a fixed size or depth). The lost sharing from top-down matching any particular subsumption tree can be avoided entirely with bottom-up matching. Hoffman+O’Donnell give a method for building a bottom-up deterministic tree recognizer, which is certainly optimal with respect to sharing and probably time if you can afford to precompute and store the (possibly exponential) monster. Bottom up tree recognizers can match against forests beautifully, since regular tree grammars are closed under intersection. Unlike top-down matching, the result of bottom-up matching would just be a set of rule (fragments) for each forest node. To get the subforests, you’d have to traverse top-down (this should be pretty fast, since you’re constrained by a specific tree pattern). In a future post, I’ll describe a bottom-up patterns against forest (or tree) matching that doesn’t explicitly determinize beforehand. I’ll also note that the type of sharing you get in bottom up matching is avoiding work in repeatedly matching subtrees that are contained in different rules. On the other hand, subsumption avoids repeated work in matching shared tops of trees. That is, a pattern that subsumes another is always larger (turning some leaves into full subtrees). Also, although I haven’t yet read it, A Simple Tree Pattern-Matching Algorithm claims to improve Hoffman+O’Donnell. It’s unlikely that this would be useful for forest matching.

EMNLP MT System Combination Papers

System combination can give large improvements in MT quality, but in practice you have to ask people to translate material for you on their system.

Duan et al. (Feature Subspace Method for SMT System Combination) take a single system and create a dozen variants of it (each leaving out a single feature, or using a lower-order language model), and gain about 1 BLEU by doing sentence-level rescoring using ngram-agreement and internal model features (weights trained on a dev set). This is more than the modest 0.25 BLEU I’ve obtained by doing the same thing for a single system’s nbests; they didn’t report their single-system reranking gain but it’s probably similar. When combining two actual systems (Hiero-style and phrase-based), they have to leave out the internal features (which are difficult or impossible to evaluate on an unrelated system output), but still get an additive ~1 BLEU when using 2*12 than 2*1 systems. They also found that 20-50 nbests were best (this agrees with my experience in sentence reranking, but obviously that’s dependent on the search errors, duplicate derivations, and other properties of the actual system and search procedure; sometimes I’ve found a small benefit from up to 1000-bests). This is an excellent result and easy (if tedious) to apply to your own system(s). I don’t find their theoretical motivation particularly compelling. It seems to me that all the benefits are explained in terms of Bayesian averaging over multiple feature-weight vectors.

Feng et al. (Lattice-based System Combination for SMT) take the nice word-level (“sausage graph”) confusion network IHMM system combination method of Xiadong He, and take the obvious step of generating lattices. They get another 0.5-1 BLEU over his result. They build the lattice from word to word alignments against a backbone translation, as in the CN case, so it’s impressive that they get a meaningful lattice. My own thought would be to have the MT systems output alignment information directly, but their way makes integration easier. It’s also possible to get much of the benefit from a lattice’s phrases by using system preferences for ngrams as a feature on top of the unigram CN; Zhao & He get almost identical improvement by doing so (they also add the usual ngram-agreement reranking feature, where you treat your weighted nbest as an English LM, into their CN decoder, which is smart). If you have an existing CN system, it’s probably easier to do this than switch to lattices (there’s no reason not to try to combine the methods, but they do very similar things already). He & Toutanova (Joint Optimization for MT Sytem Combination) also amend He’s original approach, and get a larger improvement (1.5 BLEU). The original CN IHMM approach was a pipeline of several greedy/hard decisions; this work fixes that by jointly searching possible reorderings/alignments/selections of phrases from the nbest translations. There’s probably not any way to “add lattices” on top of this; there’s no explicit backbone, and they already use bigram system voting. I’d try new features before considering lattices (e.g. nbest ngram LM, 3gram voting). They show that the integrated alignment search is important; they lose 0.3 BLEU if they restrict the search to Viterbi union alignments.

A Wrong “Alternative” 0/1 Knapsack Solver

That already causes some sub-optimal solutions. I fixed that, but that only reduced the frequency of wrong solutions. For the input:// Initial guess: the knapsack for wt-1. bestVal[wt] = bestVal[wt-1];

204The “alternative solution” given is:

9

2 2

81 81

82 82

182 182

142 142

11 11

31 31

61 61

101 101

(142, 142)But this is better:

(61, 61)

Total weight: 203

Total value: 203

(31+61+101 = 204)The problem: the “alternative” solution has already used 31 in summing to (204-31), 61 in summing to (204-61), and 101 in summing to (204-101). If the input is ordered differently, this may not happen - all 3 of those coincidences need to occur for the algorithm to fail. The algorithm wrongly commits to just one way of reaching a given sum, unlike the standard (correct) solution.